Summary

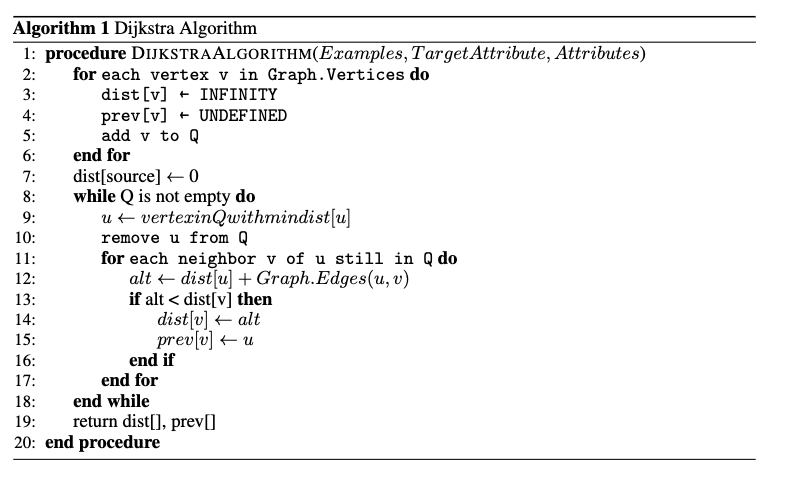

We are going to implement a parallel version of Dijkstra's algorithm on both a PIM (processing-in-memory) system and a GPU, and compare the two architectures on this graph workload.
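For reference, a sequential baseline of Dijkstra's algorithm could look like the minimal sketch below. It is illustrative only and not part of the planned PIM or GPU code. The names `Edge`, `Graph`, `kInf`, and `dijkstra` are hypothetical. The sketch assumes a directed graph stored as adjacency lists, non-negative edge weights, and path lengths that fit in 64 bits.

```cpp
#include <cstdint>
#include <functional>
#include <limits>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical types for illustration: a directed, weighted adjacency list.
struct Edge {
    int to;                // index of the destination vertex
    std::uint64_t weight;  // non-negative edge weight
};
using Graph = std::vector<std::vector<Edge>>;

// Distance value reported for vertices that cannot be reached from the source.
constexpr std::uint64_t kInf = std::numeric_limits<std::uint64_t>::max();

// Sequential Dijkstra with a binary min-heap and lazy deletion.
// Returns the shortest distance from `source` to every vertex.
std::vector<std::uint64_t> dijkstra(const Graph& graph, int source) {
    using Item = std::pair<std::uint64_t, int>;  // (tentative distance, vertex)
    std::vector<std::uint64_t> dist(graph.size(), kInf);
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> frontier;

    dist[source] = 0;
    frontier.push(Item(0, source));

    while (!frontier.empty()) {
        const Item top = frontier.top();
        frontier.pop();
        const std::uint64_t d = top.first;
        const int u = top.second;

        // Skip stale heap entries: a shorter path to u was already settled.
        if (d > dist[u]) continue;

        // Relax every outgoing edge of u.
        for (const Edge& e : graph[u]) {
            const std::uint64_t candidate = d + e.weight;
            if (candidate < dist[e.to]) {
                dist[e.to] = candidate;
                frontier.push(Item(candidate, e.to));
            }
        }
    }
    return dist;
}
```

The loop settles one vertex at a time in order of increasing distance, so the edge relaxations of a single settled vertex are the most direct source of parallelism in this formulation.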

Challenge

Although Dijkstra's algorithm is a well-studied shortest-path graph search algorithm, implementing it in parallel poses several challenges:

Goal and Deliverable

Resource

The resources we need for this project are the GHC/PSC machine clusters, with OpenMP and MPI installed and GPUs enabled, as well as access to the PIM system.